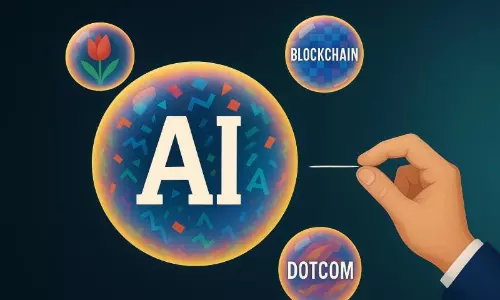

Gmail’s AI summaries may have security risk affecting 2 billion users

text_fieldsGoogle has been introducing new AI-powered tools to the Gmail mobile app, including a feature launched in June that uses Gemini to generate summaries of emails and lengthy threads. While the feature offers convenience, a recently discovered security vulnerability has raised concerns about its potential misuse.

Marco Figueroa, who manages Mozilla’s GenAI Bug Bounty Program, revealed that a security researcher uncovered a prompt injection flaw in Google Gemini for Workspace. This vulnerability could allow attackers to embed harmful commands within emails, which would only become active when a user clicks on the “Summarise this email” option in Gmail.

The technique involved crafting emails that included hidden prompts for Gemini. These were concealed in the message body using HTML and CSS tricks, such as setting the font size to zero and matching the text colour to the background, effectively making the malicious instructions invisible to the recipient, the Indian Express reported.

Since these emails contain no attachments, they are likely to evade Google’s spam filters and land directly in users’ inboxes. When a recipient opens such an email and uses Gemini to generate a summary, the AI tool ends up following the hidden instructions embedded in the message.

These concealed commands can prompt Gemini to display a phishing warning that appears to be from Google itself. Because the message comes through the AI summarisation tool, users may not question its authenticity, making the exploit particularly dangerous.

Marco Figueroa outlined potential countermeasures to detect and address such prompt injection attacks. One approach involves configuring Gemini to ignore or remove hidden content embedded in the email body. Another option is for Google to implement post-processing filters that examine Gemini’s output for red flags like urgent calls to action, phone numbers, or suspicious links and flag them for further scrutiny.

When BleepingComputer reached out to Google for comment, a spokesperson stated that some protective measures were already in place and others were being developed. The company added that although there is currently no evidence of this vulnerability being exploited in actual attacks, the research highlights that such manipulation is indeed feasible.

While Google has a strong track record of identifying and addressing security vulnerabilities, cybercriminals often remain one step ahead. Users are advised not to fully rely on AI-generated summaries and to carefully verify email content and links before engaging with them.